How US intelligence, an American company feed Israel’s killing machine in Gaza

By far the most secret of Israel’s intelligence installations in Camp Moshe Dayan is the headquarters of Unit 8200, which specializes in eavesdropping, codebreaking, and cyber warfare and is Israel’s equivalent of the American National Security Agency. One of Unit 8200’s newest and most important organizations is the Data Science and Artificial Intelligence Center, which, according to a spokesman, was responsible for developing the AI systems that “transformed the entire concept of targets in the IDF”. Back in 2021, the Israeli military described its 11-day war on Gaza as the world’s first “AI war”. Israel’s ongoing invasion of Gaza offers a more recent, and devastating, example. More than 70 years ago, the patch of land where the camp now stands was home to the Palestinian village of Ajleel, until the residents were killed or forced to abandon their homes and flee in fear during the Nakba in 1948. Now, soldiers and intelligence specialists are being trained at Camp Moshe Dayan to finish the job: to bomb, shoot, or starve to death the descendants of the Palestinians forced into the squalor of militarily occupied Gaza decades ago.

James Bamford

Best-selling author

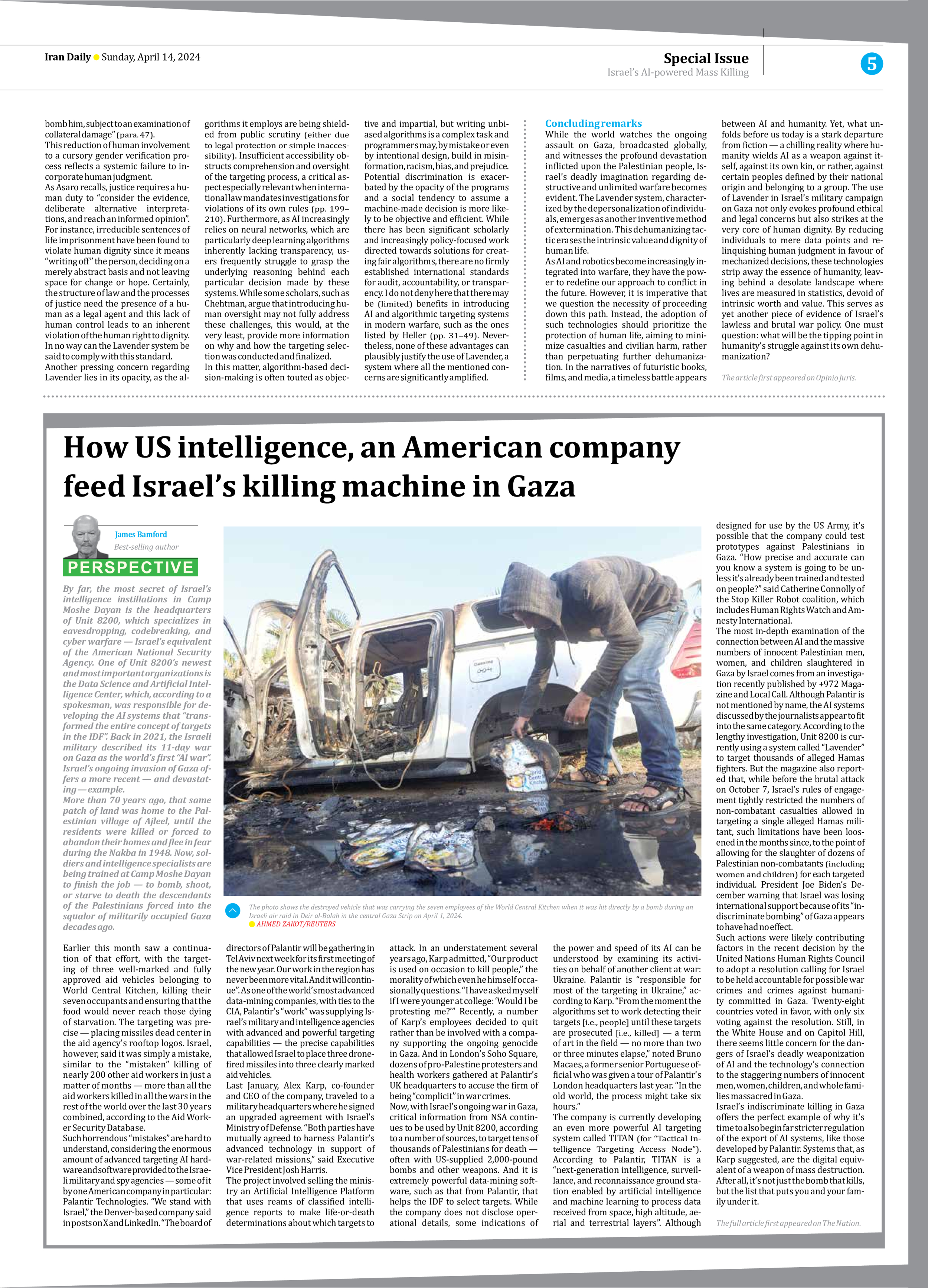

Earlier this month saw a continuation of that effort, with the targeting of three well-marked and fully approved aid vehicles belonging to World Central Kitchen, killing their seven occupants and ensuring that the food would never reach those dying of starvation. The targeting was precise — placing missiles dead center in the aid agency’s rooftop logos. Israel, however, said it was simply a mistake, similar to the “mistaken” killing of nearly 200 other aid workers in just a matter of months — more than all the aid workers killed in all the wars in the rest of the world over the last 30 years combined, according to the Aid Worker Security Database.

Such horrendous “mistakes” are hard to understand, considering the enormous amount of advanced targeting AI hardware and software provided to the Israeli military and spy agencies — some of it by one American company in particular: Palantir Technologies. “We stand with Israel,” the Denver-based company said in posts on X and LinkedIn. “The board of directors of Palantir will be gathering in Tel Aviv next week for its first meeting of the new year. Our work in the region has never been more vital. And it will continue”. As one of the world’s most advanced data-mining companies, with ties to the CIA, Palantir’s “work” was supplying Israel’s military and intelligence agencies with advanced and powerful targeting capabilities — the precise capabilities that allowed Israel to place three drone-fired missiles into three clearly marked aid vehicles.

Last January, Alex Karp, co-founder and CEO of the company, traveled to a military headquarters where he signed an upgraded agreement with Israel’s Ministry of Defense. “Both parties have mutually agreed to harness Palantir’s advanced technology in support of war-related missions,” said Executive Vice President Josh Harris.

The project involved selling the ministry an Artificial Intelligence Platform that uses reams of classified intelligence reports to make life-or-death determinations about which targets to attack. In an understatement several years ago, Karp admitted, “Our product is used on occasion to kill people,” the morality of which even he himself occasionally questions. “I have asked myself if I were younger at college: ‘Would I be protesting me?’” Recently, a number of Karp’s employees decided to quit rather than be involved with a company supporting the ongoing genocide in Gaza. And in London’s Soho Square, dozens of pro-Palestine protesters and health workers gathered at Palantir’s UK headquarters to accuse the firm of being “complicit” in war crimes.

Now, with Israel’s ongoing war in Gaza, critical information from NSA continues to be used by Unit 8200, according to a number of sources, to target tens of thousands of Palestinians for death — often with US-supplied 2,000-pound bombs and other weapons. And it is extremely powerful data-mining software, such as that from Palantir, that helps the IDF to select targets. While the company does not disclose operational details, some indications of the power and speed of its AI can be understood by examining its activities on behalf of another client at war: Ukraine. Palantir is “responsible for most of the targeting in Ukraine,” according to Karp. “From the moment the algorithms set to work detecting their targets [i.e., people] until these targets are prosecuted [i.e., killed] — a term of art in the field — no more than two or three minutes elapse,” noted Bruno Macaes, a former senior Portuguese official who was given a tour of Palantir’s London headquarters last year. “In the old world, the process might take six hours.”

The company is currently developing an even more powerful AI targeting system called TITAN (for “Tactical Intelligence Targeting Access Node”). According to Palantir, TITAN is a “next-generation intelligence, surveillance, and reconnaissance ground station enabled by artificial intelligence and machine learning to process data received from space, high altitude, aerial and terrestrial layers”. Although designed for use by the US Army, it’s possible that the company could test prototypes against Palestinians in Gaza. “How precise and accurate can you know a system is going to be unless it’s already been trained and tested on people?” said Catherine Connolly of the Stop Killer Robots coalition, which includes Human Rights Watch and Amnesty International.

The most in-depth examination of the connection between AI and the massive numbers of innocent Palestinian men, women, and children slaughtered in Gaza by Israel comes from an investigation recently published by +972 Magazine and Local Call. Although Palantir is not mentioned by name, the AI systems discussed by the journalists appear to fit into the same category. According to the lengthy investigation, Unit 8200 is currently using a system called “Lavender” to target thousands of alleged Hamas fighters. But the magazine also reported that, although Israel’s rules of engagement before the brutal attack on October 7 tightly restricted the number of non-combatant casualties allowed in targeting a single alleged Hamas militant, such limitations have been loosened in the months since, to the point of allowing for the slaughter of dozens of Palestinian non-combatants (including women and children) for each targeted individual. President Joe Biden’s December warning that Israel was losing international support because of its “indiscriminate bombing” of Gaza appears to have had no effect.

Such actions were likely contributing factors in the recent decision by the United Nations Human Rights Council to adopt a resolution calling for Israel to be held accountable for possible war crimes and crimes against humanity committed in Gaza. Twenty-eight countries voted in favor, with only six voting against the resolution. Still, in the White House and on Capitol Hill, there seems little concern for the dangers of Israel’s deadly weaponization of AI and the technology’s connection to the staggering numbers of innocent men, women, children, and whole families massacred in Gaza.

Israel’s indiscriminate killing in Gaza offers the perfect example of why it’s time to begin far stricter regulation of the export of AI systems like those developed by Palantir, systems that, as Karp suggested, are the digital equivalent of a weapon of mass destruction. After all, it’s not just the bomb that kills, but the list that puts you and your family under it.

The full article first appeared on The Nation.